What is Audio

Analog to Digital Conversion

Sample Rate

Getting Deeper into Sample Rate

The common sample rate of 44.1 kHz was chosen primarily for compatibility with early audio technologies and is rooted in both technical and practical reasons:

- According to the Nyquist theorem, the sample rate must be at least twice the highest frequency in the audio signal to reproduce it accurately without aliasing (see the example in the picture).

- Humans typically hear frequencies up to 20 kHz, so a minimum sample rate of 40 kHz is needed.

- 44.1 kHz provides a safe margin above 40 kHz to account for filter imperfections during analog-to-digital and digital-to-analog conversion.
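Here is a small Python sketch that makes the numbers in that list concrete. It assumes 20 kHz as the hearing limit, as above. The functions `nyquist_min_rate` and `nyquist_frequency` are illustrative helpers written for this example, not from any library.

```python
# Minimal sketch: the Nyquist arithmetic behind 44.1 kHz.
# Assumption: 20 kHz is the upper limit of human hearing, as in the text.

HEARING_LIMIT_HZ = 20_000
CD_SAMPLE_RATE_HZ = 44_100


def nyquist_min_rate(max_freq_hz: float) -> float:
    """Lowest sample rate that can represent max_freq_hz (twice that frequency)."""
    return 2 * max_freq_hz


def nyquist_frequency(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2


min_rate = nyquist_min_rate(HEARING_LIMIT_HZ)
margin = CD_SAMPLE_RATE_HZ - min_rate
# Room between the top of hearing and the Nyquist frequency,
# where the anti-aliasing filter can roll off.
transition_band = nyquist_frequency(CD_SAMPLE_RATE_HZ) - HEARING_LIMIT_HZ

print(f"Minimum sample rate: {min_rate:.0f} Hz")
print(f"Margin above minimum: {margin:.0f} Hz")
print(f"Filter transition band: {transition_band:.0f} Hz")
```

Running it prints a 40,000 Hz minimum and a 4,100 Hz margin. It also prints a 2,050 Hz band between 20 kHz and 22.05 kHz (half of 44.1 kHz), which is where the filters can do their work.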

What is Frequency, And How Does it Relate to Sample Rate?

When talking about sound, frequency, a property of waves (my brain already hurts), is how many times per second the sound wave vibrates, measured in hertz (Hz). Higher frequencies are higher-pitched sounds (like a whistle, whose wave cycles are tightly packed), and lower frequencies are deeper sounds (like a bass drum, whose wave cycles are stretched out). The human hearing range typically spans from 20 Hz (low bass) to 20,000 Hz, or 20 kHz (high pitch).
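Frequency is the number of cycles per second. If you flip it over, you get the period, which is the time one cycle takes. The tiny Python sketch below shows how different the two ends of human hearing are. The helper `period_seconds` is hypothetical and exists only for this illustration.

```python
# Minimal sketch: frequency (cycles per second, Hz) vs. period (seconds per cycle).
# The example frequencies are the edges of human hearing mentioned in the text.

def period_seconds(freq_hz: float) -> float:
    """Duration of one full wave cycle: period = 1 / frequency."""
    return 1.0 / freq_hz


for label, freq in [("low bass", 20.0), ("high pitch", 20_000.0)]:
    ms = period_seconds(freq) * 1000
    print(f"{label}: {freq:.0f} Hz -> one cycle every {ms:.3f} ms")
```

A 20 Hz bass note takes 50 ms per cycle, while a 20 kHz tone finishes a cycle in 0.05 ms. That is why high frequencies need so many more samples per second.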

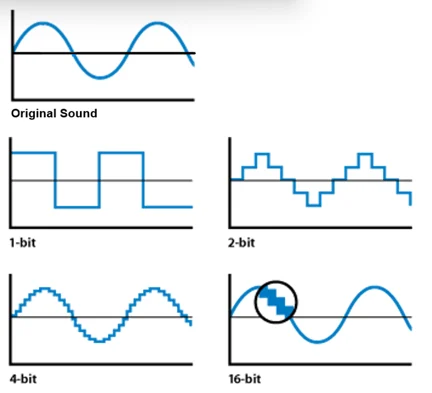

Now, when we convert sound into digital form, we need to take samples of the sound wave at regular intervals. The sample rate determines how often we take those samples. The more samples per second, the higher the frequencies the digital version can capture.
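Here is a minimal sketch of what "taking samples at regular intervals" means. It assumes a 1 kHz sine tone and a 44.1 kHz sample rate, both picked only for illustration. `sample_sine` is a hypothetical helper. Sample number n is taken at time t = n / sample rate.

```python
import math

# Minimal sketch: sampling a pure tone at regular intervals.
# Assumptions: a 1 kHz sine tone and a 44.1 kHz sample rate, chosen for illustration.

def sample_sine(freq_hz: float, sample_rate_hz: float, num_samples: int) -> list[float]:
    """Return num_samples values of sin(2*pi*f*t), taken at t = n / sample_rate."""
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]


samples = sample_sine(1_000, 44_100, 10)
print([round(s, 3) for s in samples])

# How many samples land on each cycle of the wave
print(f"Samples per cycle: {44_100 / 1_000:.1f}")
```

At these settings, each cycle of the 1 kHz tone gets about 44 samples. Raise the tone's frequency and that number drops.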

Twice the frequency rule: for the digital version to represent the wave accurately without distortion, the sample rate must be at least twice the highest frequency we want to capture. If you sample less often than that, the digital version can "mishear" the sound. Frequencies above half the sample rate show up as lower frequencies that were never in the original, a distortion called aliasing.
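To see the "mishearing" in action, this Python sketch samples a 30 kHz tone at 44.1 kHz. That tone is above 22.05 kHz (half the sample rate), so it can't be captured correctly. The sketch assumes pure cosine tones and 50 samples. `alias_frequency` and `sample_cosine` are hypothetical helpers written for this example.

```python
import math

# Minimal sketch of aliasing: two different tones that produce identical samples.
# Assumptions: a 44.1 kHz sample rate and a 30 kHz tone, which is above the
# 22.05 kHz limit (half the sample rate), so it cannot be captured correctly.

SAMPLE_RATE_HZ = 44_100


def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Frequency a pure tone appears at after sampling (folded into 0..rate/2)."""
    folded = freq_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


def sample_cosine(freq_hz: float, sample_rate_hz: float, num_samples: int) -> list[float]:
    """Return num_samples values of cos(2*pi*f*t), taken at t = n / sample_rate."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(num_samples)]


too_high = sample_cosine(30_000, SAMPLE_RATE_HZ, 50)
impostor = sample_cosine(alias_frequency(30_000, SAMPLE_RATE_HZ), SAMPLE_RATE_HZ, 50)

print(alias_frequency(30_000, SAMPLE_RATE_HZ))  # where the 30 kHz tone is "misheard"
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(too_high, impostor)))
```

The 30 kHz tone produces the same samples as a 14.1 kHz tone (44.1 - 30 = 14.1), so once it's digital there is no way to tell them apart. That's why the analog signal is filtered before sampling.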

So, if you're capturing sounds with a frequency up to 20 kHz (the highest sound humans can hear), your sample rate should be at least 40 kHz to avoid distortions. This is why 44.1 kHz (CD quality) is a common standard.

But Awad, what if the highest frequency of the sound is 80 kHz? Well, first of all, you can't hear it. Second, you won't need it unless you're a scientist working with ultrasound. In that case, you need to sample the sound at 160 kHz or more to get an accurate digital representation.
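Here is the same rule applied as code. This sketch picks the smallest rate strictly above twice the highest frequency. It uses "strictly above" because a tone sitting exactly at half the sample rate can't be captured reliably. The candidate list is an assumption (commonly used audio rates), and `pick_sample_rate` is a hypothetical helper. Real ultrasound equipment may use completely different rates.

```python
from typing import Optional

# Minimal sketch: choosing a sample rate for a given highest frequency.
# Assumption: the candidate list holds commonly used audio sample rates;
# real ultrasound equipment may use entirely different rates.

COMMON_RATES_HZ = [44_100, 48_000, 88_200, 96_000, 176_400, 192_000]


def pick_sample_rate(max_freq_hz: float, candidates: list[int]) -> Optional[int]:
    """Smallest candidate rate strictly above twice max_freq_hz, or None if none fits."""
    required = 2 * max_freq_hz
    fitting = [rate for rate in sorted(candidates) if rate > required]
    return fitting[0] if fitting else None


print(pick_sample_rate(20_000, COMMON_RATES_HZ))  # audible range -> 44100
print(pick_sample_rate(80_000, COMMON_RATES_HZ))  # ultrasound example -> 176400
```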

Now you have a much deeper understanding of the sample rate. To sum up, 44.1 kHz was chosen for three reasons. It covers the full range of human hearing (per the Nyquist theorem), it was practical with early video technology, and it balanced quality with data storage constraints. It remains a standard thanks to its widespread adoption and backward compatibility with existing systems.
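As for the "data storage constraints" part, the sample rate directly multiplies how much data you store. This sketch assumes uncompressed audio with 16-bit samples and 2 channels (the CD format). `pcm_bit_rate` is a hypothetical helper name.

```python
# Minimal sketch: how sample rate drives the amount of data to store.
# Assumptions: uncompressed PCM, 16-bit samples and 2 channels (the CD format).

def pcm_bit_rate(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Bits per second for uncompressed audio = rate x bit depth x channels."""
    return sample_rate_hz * bit_depth * channels


for rate in (44_100, 96_000):
    bps = pcm_bit_rate(rate, 16, 2)
    mb_per_minute = bps * 60 / 8 / 1_000_000
    print(f"{rate} Hz: {bps / 1000:.1f} kbps, about {mb_per_minute:.1f} MB per minute")
```

At 44.1 kHz that works out to 1,411.2 kbps, roughly 10.6 MB per minute. Going to 96 kHz more than doubles it.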

Figure: If you use a low sample rate on high-frequency waves (supposing they are audio), you lose a lot of information. Think about it: one moment the wave is at the bottom, the next moment it's at the top. (source)

Figure: An example of how analog waves are sampled into digital waves. The lower the sample rate, the more aliasing. (source)

Bit Depth

Bit Rate

Relationship Between Sample Rate, Bit Depth, and Bit Rate

Sources:

- https://www.techtarget.com/whatis/definition/sound-wave

- https://routenote.com/blog/what-is-sample-rate-in-audio/

- https://www.waveroom.com/blog/bit-rate-vs-sample-rate-vs-bit-depth/

- https://www.reddit.com/r/musicproduction/comments/1dyfj97/bit_depth_and_sample_rate/

- https://splice.com/blog/analog-to-digital-conversion/

- https://www.headphonesty.com/2019/07/sample-rate-bit-depth-bit-rate/

- https://svantek.com/academy/sound-wave/

- https://restream.io/learn/what-is/audio-bitrate/

- https://www.sciencefocus.com/science/how-do-we-convert-audio-from-analogue-to-digital-and-back

- https://www.lalal.ai/blog/sample-rate-bit-depth-explained/
